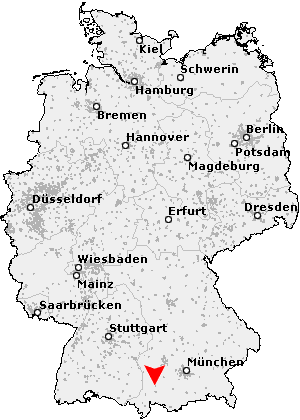

The third day of a Dagstuhl seminar traditionally includes a half-day trip outside the castle. This time we traveled to a place called Mettlach, seen in the picture. We sailed in a boat on the Saar river and saw this island, and then went to a place from which we could view the island from the hill above. We also traveled to a winery, tasted six kinds of wine, and heard long explanations (in German) about these wines.

In the morning we had one more breakout session and a deep dive into topic 2: what are the functions of event processing (including non-functional ones)? For some of them (e.g. provenance) there were differences of opinion about whether they are functional or non-functional.

There were discussions about the boundaries of event processing: are "actions" internal or external to event processing? They seem to be external, but for provenance and retraction the event processing system should be aware of them. The team also identified a collection of topics that require further research; here is the list:

- Use of EP to predict (anticipate) problems

- Use of predictions (e.g. from simulations) in EP

- Complex actions

- Action processing as the converse of event processing

- Decomposition of complex actions with time constraints

- Goal-directed reaction

- Adaptive planning

- Implicit validation

- Function placement and optimization

- Real-time machine-generated specification

- Compensation and Retraction

- Privacy and Security

- Probabilistic events

- Provenance

Another Dagstuhl tradition is to take a group picture, always in the same place, on the stairs of the castle's old church: